Beyond the Hype: How to Create a Competitive Edge with AI

May 2024

Straight To The Point

Artificial Intelligence (AI) is transforming the financial sector, with investments expected to grow significantly, emphasizing its role in competitive differentiation. The success of AI projects hinges on understanding the complexities of costs, the importance of building reusable assets, and the strategic implementation of an AI assessment framework to maximize ROI. But this isn't always easy in financial services: given the regulatory challenges, firms must have effective strategies for responsible AI deployment.

AI is Becoming Table Stakes

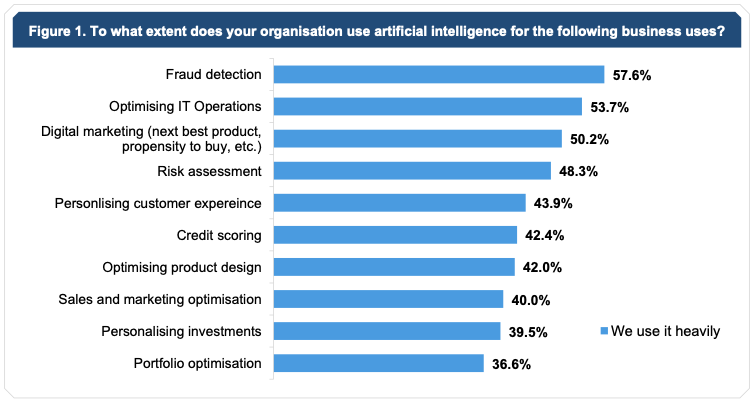

Total spending on AI in financial services has increased steadily over the last several years, and that trend is expected to accelerate at a ~30% CAGR, boosting total spending from an estimated $35 billion in 2023 to a projected ~$100 billion by 2027. Broadly speaking, most financial services firms have at least begun experimenting with AI, with over 50% leveraging it in areas such as fraud detection, IT operations, and digital marketing. In an environment where all firms are racing to use AI to provide clients with innovative products, improve the bottom line, and gain market share, the winners will be those that understand the requirements for AI modeling and can harness their existing data and technology environments to support a steady pipeline for the growing list of applicable AI use cases.
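The growth figures above can be sanity-checked with the standard compound-growth formula. The sketch below assumes the 2023 base of $35 billion and four compounding years to 2027; the function names are illustrative only.

```python
def project_spend(base: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return base * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Back out the constant annual growth rate linking two values."""
    return (end / start) ** (1 / years) - 1


# Figures from the text: ~$35B in 2023, ~30% CAGR, horizon 2027 (4 years).
spend_2027 = project_spend(35.0, 0.30, 4)
rate = implied_cagr(35.0, 100.0, 4)
print(f"Projected 2027 spend: ${spend_2027:.1f}B")
print(f"CAGR implied by $35B -> $100B: {rate:.1%}")
```

Under these assumptions the two quoted figures are consistent with each other: compounding $35 billion at 30% for four years lands at roughly $100 billion.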

Key Investment: AI Assessment Framework

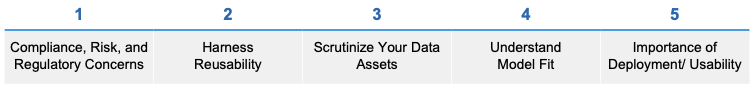

70% of all projects, regardless of type, fail and, as of year-end 2023, up to 85% of AI-related projects failed. While the all-in cost to build an AI model will vary depending on several factors, such as dataset size, model complexity, computing power needed, and training requirements, OpenAI predicts the overall cost of an AI model will increase 4-5x by 2030. With costs high and projected to rise, successful firms will be those that intimately understand the cost structures of an AI model, how to build reusable assets, and how to extract value. Thankfully, most AI projects follow a similar approach that can be deconstructed to create a reusable framework to assess a use case’s costs, reusable components, potential benefits, and ultimately ROI. Throughout this piece, we will discuss several relevant topics to consider when creating an AI Assessment Framework and building out an AI pipeline, including compliance and regulatory concerns, asset reusability, data assets, model fit, and deployment.
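As a rough illustration of what such an assessment framework might capture, the sketch below records a use case's costs, the savings from reusable components, and expected benefits, then derives a simple ROI. The class, field names, and dollar amounts are all hypothetical; a real framework would also account for ongoing run costs and discounting.

```python
from dataclasses import dataclass


@dataclass
class UseCaseEstimate:
    """Hypothetical cost/benefit inputs for one AI use case (all in USD)."""
    name: str
    data_cost: float            # sourcing, cleaning, and labeling data
    modeling_cost: float        # experimentation, training, compute
    deployment_cost: float      # integration, APIs, monitoring
    annual_benefit: float       # expected yearly value once live
    reuse_savings: float = 0.0  # cost avoided by reusing existing assets

    def total_cost(self) -> float:
        gross = self.data_cost + self.modeling_cost + self.deployment_cost
        return max(gross - self.reuse_savings, 0.0)

    def roi(self, years: int = 3) -> float:
        """Simple undiscounted ROI over the horizon: (benefit - cost) / cost."""
        cost = self.total_cost()
        if cost == 0:
            raise ValueError("total cost must be positive to compute ROI")
        return (self.annual_benefit * years - cost) / cost


# Illustrative numbers only; not drawn from the article.
fraud = UseCaseEstimate("fraud detection", 400_000, 600_000, 500_000,
                        annual_benefit=900_000, reuse_savings=300_000)
print(f"{fraud.name}: cost ${fraud.total_cost():,.0f}, 3-year ROI {fraud.roi():.0%}")
```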

Compliance, Risk, and Regulatory Concerns

For the first time, the Financial Stability Oversight Council (Council) included a section outlining the risks posed by AI in its 2023 annual report, published in December. The Council, which is chaired by Treasury Secretary Yellen and includes all major US regulators, recommends that all financial institutions “ensure that oversight structures [in relation to AI] keep up with or stay ahead of emerging risks to the financial system.” The Council also references a recommendation, and perhaps a hint, that supervisory authorities further “build out expertise and capacity to monitor AI innovation and usage and identify emerging risks.”

As AI continues to become more prevalent throughout the Financial Services sector, regulators will undoubtedly ramp up oversight, which could significantly slow progress. Over time, we foresee a ‘use case dependent’ sliding scale approach being adopted, where certain use cases require more scrutiny than others. “Scrutiny levers” will likely include sensitivity of data, explainability needs, and risk of human fault. For instance, using an AI model to make loan decisions will require a very high level of scrutiny and oversight, while using AI for process automation in middle-office operations will require significantly less. Additionally, a large language model trained on sensitive data, such as PII, is inherently at risk of inappropriately revealing that data and, therefore, will and should have additional infosec requirements. This risk can be partially mitigated by obfuscating data prior to modeling.
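One common way to obfuscate data before modeling is keyed pseudonymization, where sensitive values are replaced with tokens derived from a secret key. The sketch below is one possible approach, not a prescribed method: the field names and key are placeholders, and pseudonymization alone does not amount to full anonymization.

```python
import hashlib
import hmac

# Assumed field names; a real schema would come from a data inventory.
PII_FIELDS = {"name", "ssn", "email"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace PII values with keyed hashes; leave other fields unchanged."""
    masked = {}
    for field, value in record.items():
        if field in PII_FIELDS and value is not None:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()[:16]  # truncated token for readability
        else:
            masked[field] = value
    return masked


key = b"replace-with-a-managed-secret"  # placeholder; store real keys in a vault
raw = {"name": "Jane Doe", "ssn": "123-45-6789", "balance": 1520.75}
safe = pseudonymize(raw, key)
print(safe)
```

Because the same input and key always produce the same token, records can still be joined across data sets without exposing the underlying values to the model.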

Harness Reusability

While regulators will likely slow the pace of innovation in an effort to reduce risk, the best way for firms to maintain both innovation velocity and regulator confidence will be through reusability of assets. When assessing a library of AI use cases, heavy weight should be given to those use cases that will enable reusable asset creation across the data itself, the data pipeline, modeling, and/or integration. Top firms will use continued investments in AI infrastructure to bring down their cost basis on future projects and, therefore, open up their ability to chase additional use cases. For a deeper dive into Wealth Management-specific use cases, see our in-depth article “Prioritizing Pivotal AI Use Cases within Wealth Management.”
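To make the idea of weighting use cases by reusability concrete, the sketch below blends a use case's standalone ROI with the share of the four layers named above (data, pipeline, modeling, integration) in which it creates reusable assets. The scoring formula, the 0.5 weight, and the example use cases are assumptions for illustration, not a recommended calibration.

```python
# The four layers named in the text where reusable assets can be created.
REUSE_LAYERS = ("data", "pipeline", "modeling", "integration")


def priority_score(roi: float, reusable_layers: set, reuse_weight: float = 0.5) -> float:
    """Blend standalone ROI with the share of layers that yield reusable assets."""
    unknown = reusable_layers - set(REUSE_LAYERS)
    if unknown:
        raise ValueError(f"unknown layers: {sorted(unknown)}")
    reuse_share = len(reusable_layers) / len(REUSE_LAYERS)
    return (1 - reuse_weight) * roi + reuse_weight * reuse_share


# Hypothetical use cases: (name, estimated ROI, layers producing reusable assets).
candidates = [
    ("chatbot pilot", 0.9, {"integration"}),
    ("client data hub model", 0.6, {"data", "pipeline", "modeling"}),
]
ranked = sorted(candidates, key=lambda c: priority_score(c[1], c[2]), reverse=True)
for name, roi, layers in ranked:
    print(f"{name}: {priority_score(roi, layers):.3f}")
```

With these inputs the use case with lower standalone ROI ranks first, because it builds assets in three of the four layers that later projects can draw on.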

Scrutinize your Data Assets

Once a problem statement has been defined, it is important to gauge what data sets will be required to build the model and evaluate the state of each data set, including existing and net new assets. Evaluating existing data assets requires additional scrutiny, as the “garbage in, garbage out” concept becomes exponentially more problematic when compounded with the current inability to fully solve interpretability and explainability of complex models. While solving the black box paradox is a larger problem, organizations can increase the explainability of their data assets, which will in turn increase confidence in the models. Net new assets, which are completely new data sets or existing data with alterations, will require significant effort to ensure quality, but also to ensure the new asset doesn’t cause friction with the existing pipeline. Additionally, it is highly likely that a core set of data (e.g., core reference data, common transaction data) will be used consistently across multiple models and should therefore be built with reuse in mind. With that in mind, below are some questions to consider when building out a framework:

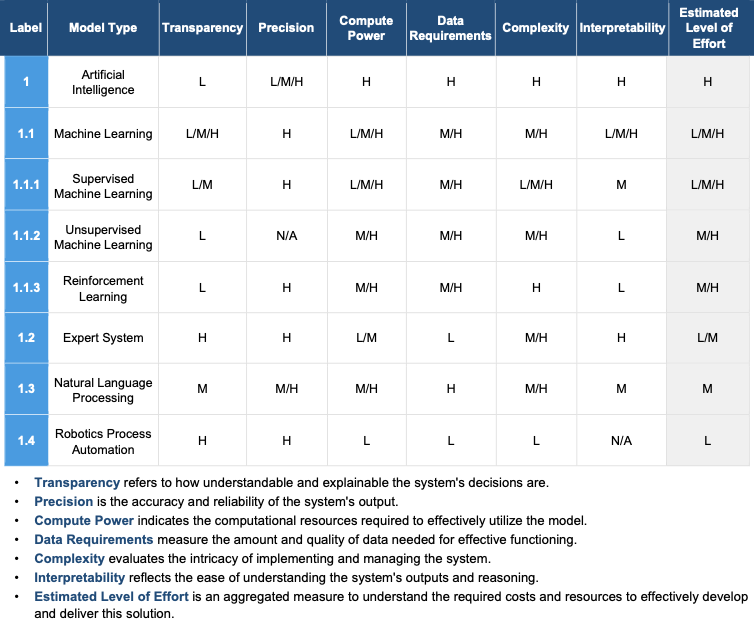

Understand Model Fit

A typical data scientist will explore multiple algorithm options while testing the viability of a single AI use case. This may make ongoing costs seem difficult to gauge prior to some level of implementation, but algorithm types can be assessed in the aggregate to provide, at a minimum, a comparative cost in relation to each other. By creating and applying a flexible framework that teases out the underlying requirements of the use case in question and mapping them against the most applicable algorithm types, it becomes possible to estimate costs within a reasonable margin of error. Some example questions to tease out the correct algorithm include:

- Is the use case generally focused on predictive capabilities, processing power, or automation?

- What level of risk and regulatory scrutiny is expected? Is high transparency and/or interpretability a requirement?

- What is the size of the available data set? What is available in terms of compute resources?

If the organization has a low risk profile and limited resources, the range of solutions may be limited to supervised machine learning and robotic process automation opportunities. Alternatively, more established data science organizations may have a wider range that includes the full scope of machine learning tools along with natural language processing techniques.
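The questions above can be turned into a simple screening step that maps a use case's constraints onto candidate algorithm families and their comparative cost. The sketch below is a minimal illustration: the catalogue entries, interpretability flags, data-volume thresholds, and cost scale are all assumed values, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlgorithmFamily:
    name: str
    interpretable: bool   # can decisions be explained to a reviewer? (assumed)
    min_rows: int         # rough data volume needed (assumed figure)
    relative_cost: int    # 1 = cheapest, 5 = most expensive (assumed scale)


# Illustrative catalogue; the entries and numbers are assumptions, not benchmarks.
CATALOGUE = [
    AlgorithmFamily("rules / robotic process automation", True, 0, 1),
    AlgorithmFamily("linear or logistic regression", True, 1_000, 2),
    AlgorithmFamily("gradient-boosted trees", False, 10_000, 3),
    AlgorithmFamily("deep neural network / NLP model", False, 100_000, 5),
]


def shortlist(needs_interpretability: bool, available_rows: int, max_cost: int):
    """Return families that satisfy the use case's constraints, cheapest first."""
    fits = [
        fam for fam in CATALOGUE
        if (fam.interpretable or not needs_interpretability)
        and fam.min_rows <= available_rows
        and fam.relative_cost <= max_cost
    ]
    return sorted(fits, key=lambda fam: fam.relative_cost)


# A high-scrutiny use case (e.g. loan decisions) with a mid-sized data set.
for fam in shortlist(needs_interpretability=True, available_rows=50_000, max_cost=3):
    print(fam.name, fam.relative_cost)
```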

Importance of Deployment/Usability

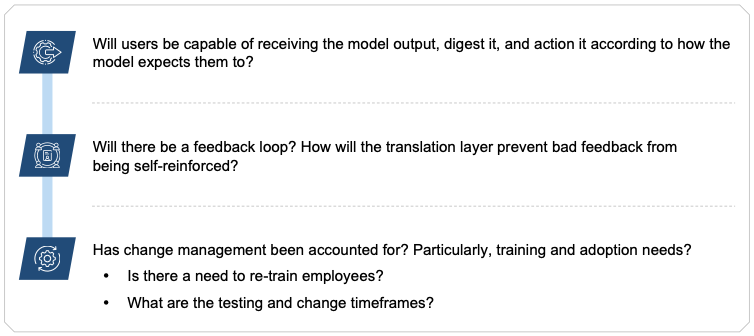

The perfect AI model is only useful when it is implemented and deployed where it can be executed as part of the process, user experience, or customer experience for which it is intended. The environment where a model is required to be deployed often has more stringent performance, resiliency, and security requirements. Most likely, the model will need to be integrated with existing Customer and/or Employee UI systems and databases. This integration is often underestimated and left until late in the lifecycle, leading to additional costs (development and runtime) and project delays that impact the business outcome.

Key questions to ask early in the development process:

- In what systems/channels will the model be deployed?

- What are the response time requirements?

- What data is required to execute the model, and is that data available in those channels?

- Will an API set need to be developed?

- Will execution of the model be required or optional? If the model and data are not available, will the system/channel continue to function, or is the model 100% mission critical?

- Must the model execute in real-time? Or can it execute ‘off-line’ with the results made available in the real-time experience?
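Several of these questions (required versus optional execution, real-time versus off-line results) translate directly into how a calling system handles the model. The sketch below shows one possible pattern, assuming a hypothetical in-memory cache of batch-computed scores and a hypothetical live model callable: try the live model, fall back to the off-line result, and only fail outright when the model is mission critical.

```python
from typing import Callable, Optional

# Scores computed in a nightly batch and cached for real-time lookup (assumed store).
OFFLINE_SCORES = {"cust-001": 0.82}


def get_score(customer_id: str,
              live_model: Optional[Callable[[str], float]] = None,
              mission_critical: bool = False) -> Optional[float]:
    """Try the live model, then the offline cache, then degrade or fail."""
    if live_model is not None:
        try:
            return live_model(customer_id)
        except Exception:
            pass  # fall through to the cached result
    if customer_id in OFFLINE_SCORES:
        return OFFLINE_SCORES[customer_id]
    if mission_critical:
        raise RuntimeError(f"no score available for {customer_id}")
    return None  # caller continues without a model-driven recommendation


def failing_model(customer_id: str) -> float:
    """Stand-in for a live endpoint that misses its response-time budget."""
    raise TimeoutError("model endpoint exceeded its response-time budget")


print(get_score("cust-001", live_model=failing_model))  # served from the offline cache
print(get_score("cust-999"))                            # optional model: returns None
```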

In addition to the technical aspects, how the output of the AI model will be manifested in a meaningful and clear way to the users must be considered. There is often a need to incorporate a translation layer to facilitate user adoption. When building out the mechanism(s) to manifest the model outputs, such as an API set, it is important to account for the translations required to make the output understandable.
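A translation layer of this kind can be as simple as a function that converts a raw model score into a label and a plain-language message before it reaches the user. The sketch below assumes a fraud-style probability output; the thresholds, labels, and wording are placeholders that would in practice be agreed with the business.

```python
def translate_output(probability: float) -> dict:
    """Turn a raw model probability into a label and plain-language message."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Thresholds are placeholders; real cut-offs would be set with the business.
    if probability >= 0.8:
        label, message = "high", "Likely fraud: hold the transaction for review."
    elif probability >= 0.4:
        label, message = "medium", "Unusual activity: ask the client to confirm."
    else:
        label, message = "low", "No action needed."
    return {"score": round(probability, 2), "risk_level": label, "message": message}


print(translate_output(0.91))
```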

While AI projects are not and should not be treated the same as application development projects, it is worthwhile to conduct upfront analysis to understand the costs required to go from AI to useful AI. Useful AI aims to increase adoption of the model and decrease risk through well-thought-out human-computer interaction.

Final Thoughts

Many artificial intelligence and machine learning algorithms have been around since the 1950s, but because of the advent of LLMs, such as ChatGPT (which have only recently become viable due to extremely large data sets, rapid improvements in GPUs, and cloud computing), many firms will feel the need to greenlight their own AI models to keep pace. This is the classic action bias that led to previous AI winters in the mid-1970s, late 1980s, and early 2000s.

The ultimate winners will be those firms who can:

- Create a reusable pipeline to build quality AI models quickly.

- Minimize sunk costs by focusing on the highest ROI use cases.

- Properly plan and account for deployment needs to ensure the AI is useful to the business.

How RP Can Help

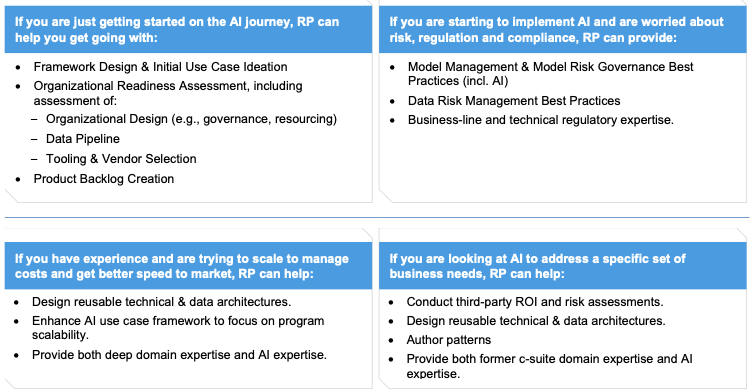

Reference Point can assist firms in the following ways:

About Reference Point

Reference Point is a strategy, management, and technology consulting firm focused on delivering impactful solutions for the financial services industry. We combine proven experience and practical experience in a unique consulting model to give clients superior quality and superior value. Our engagements are led by former industry executives, supported by top-tier consultants. We partner with our clients to assess challenges and opportunities, create practical strategies, and implement new solutions to drive measurable value for them and their organizations.